For years, the IT industry has been balancing efficiency with security. Efficiency can be gained by relying on third-party technology for everything from cloud servers to security tools. But an integration with a vendor can compromise a carefully prepared secure environment. This white paper analyzes the risk of the ‘Software Agent’ model versus effective network segmentation.

Software agents, once hailed as the epitome of automation, have revealed themselves as significant security liabilities. They persist within networks as untested, uncontrolled and unmonitored programs. It is hard for me to understand how we could consider an environment that allows this type of intrusion to be secure. Yet these agents, which often operate with elevated privileges, have become a dominant architecture within the cybersecurity industry.

Moreover, the complexities of managing and securing software agents are substantial. It is now painfully obvious that even the most well-funded cybersecurity vendors struggle to maintain effective change management processes. The vulnerabilities inherent in agent-driven automation were starkly illuminated by the July 2024 CrowdStrike outage. Systems once considered secure were taken offline because the endpoint protection software agents could execute code with absolute independence. The repercussions of such failures are catastrophic, resulting in billions of dollars in damages and widespread disruptions to critical services.

The key to cybersecurity in third-party engagement is appropriate network segmentation. This is not a new concept, but we’ve dropped the ball by letting software agents deliver short-term automation at the cost of a plethora of zero-day cybersecurity risks. By implementing network segmentation on top of HTTP/S retrieval systems, businesses can remove the threat software agents pose to their secure computing environments.

| Feature | Software Agents | HTTP/S Retrieval Systems |
| --- | --- | --- |
| Deployment | Installed within network | External server-based |
| Attack Surface | Larger due to persistent presence | Smaller due to external processing |
| Management Complexity | High, requiring ongoing updates and maintenance | Lower, with centralized control |
| Data Security | Vulnerable to internal threats and data breaches | Enhanced protection through encryption and external processing |
| Performance | Can impact system performance | Minimal performance impact |

A basic secure computing environment

A common computing environment allows software and systems to be accessed by the appropriate users. Because this environment is designed to remain within our control, we can treat it as secure. We like this form of secure environment because we have a defined surface area, a defined trust process and a defined threat response.

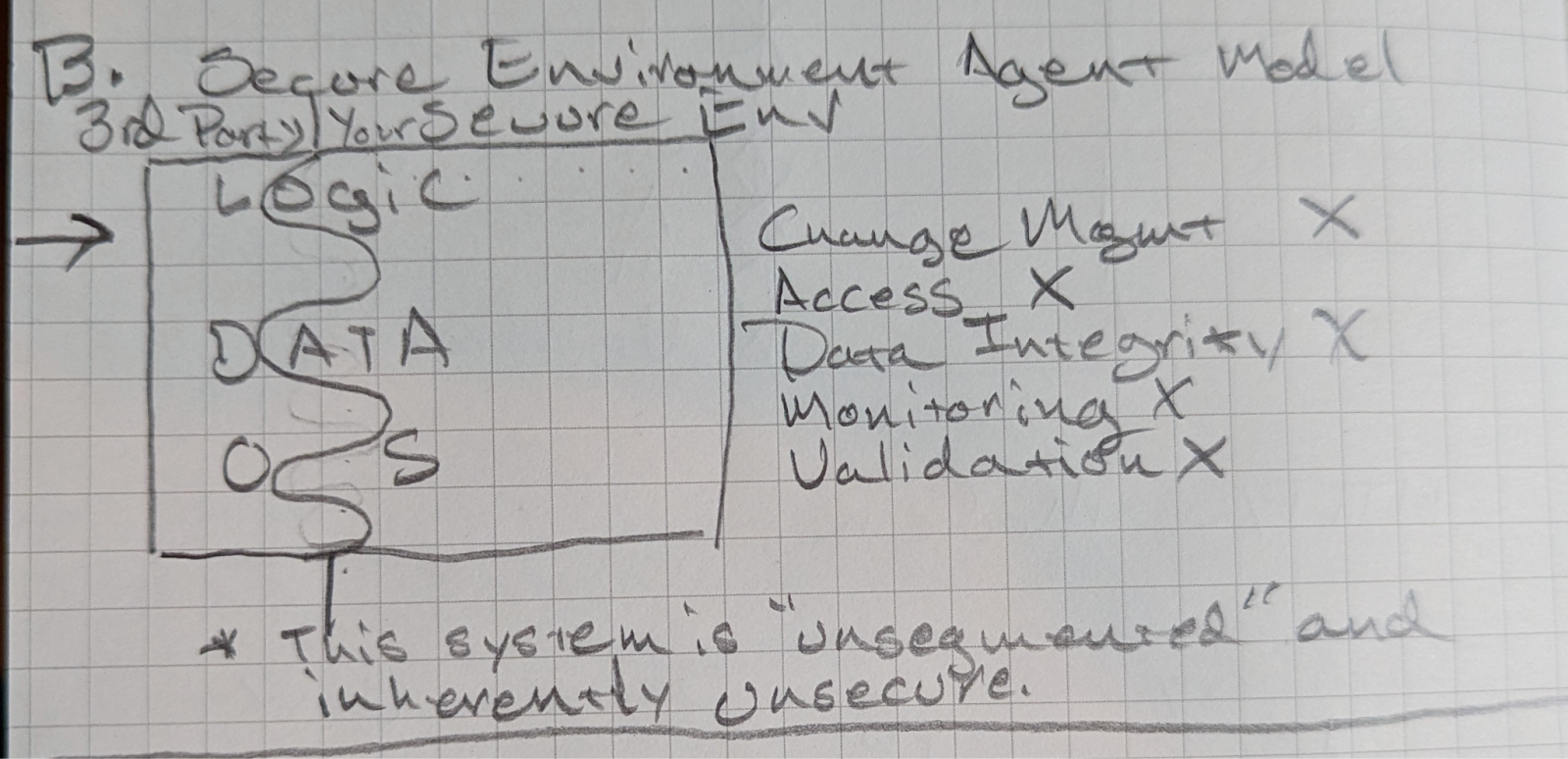

Implementation of a security agent

When we deploy a third-party agent that performs automation, we compromise our secure environment. Because a third-party agent can execute any command issued by that third party, we no longer have a secure environment. As an example of how pervasive this problem is, the Stanford Empirical Security Research Group recently studied browser extensions in the Chrome Web Store. They found “that Security-Noteworthy Extensions (SNE) are a significant issue: they pervade the Chrome Web Store for years and affect almost 350 million users.” (https://doi.org/10.48550/arXiv.2406.12710).

As the diagram shows, a security agent is an invader in our secure environment. It can act with almost complete impunity. For example, the software agents in the Chrome Web Store, also known as browser extensions, have the power to collect text from logins and insert new code into live web pages. The security vulnerabilities are staggering.

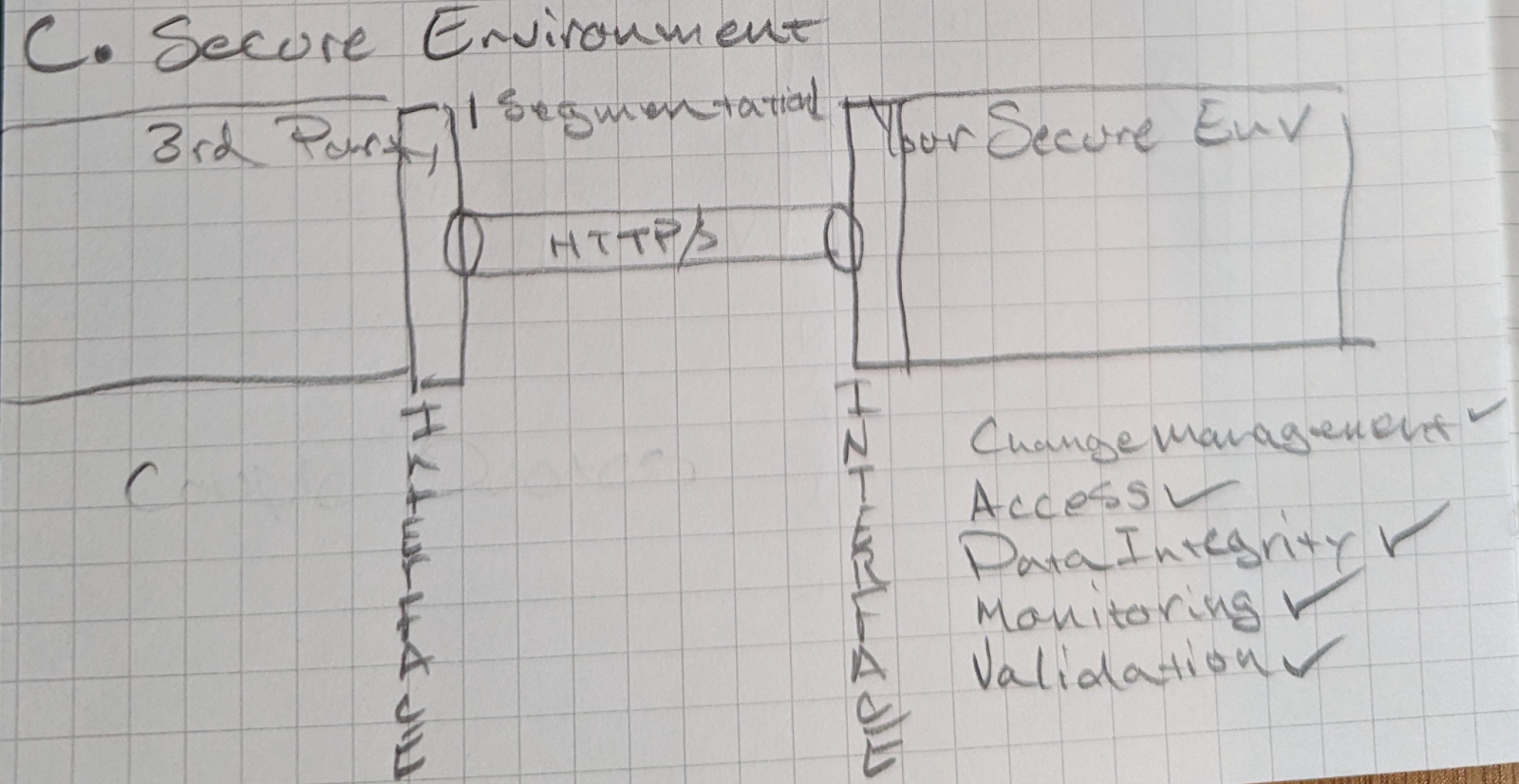

A network segmentation model

To operate securely with third-party systems, a network segmentation approach is required. This is not a new concept. In fact, it is quite common. The most common method of network segmentation is encrypted transmission of data over HTTP/S. It has been hardened and battle-tested for almost 30 years and runs much of the internet. By bringing back network segmentation, we regain the ability to control our secure environment.

This architecture restores control over our secure environment because the integration must be intentionally deployed by both parties. That requirement adds an extra layer of security to the integration.
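As a rough illustration of what an intentionally deployed integration can look like on our side of the boundary, the sketch below permits outbound requests only to hosts we have explicitly approved, and only over HTTPS on the standard port. The host `api.vendor.example` and the names `ALLOWED_HOSTS` and `is_permitted` are hypothetical, invented for this example; in practice the same rule would normally also be enforced in a firewall or egress proxy.

```python
from urllib.parse import urlsplit

# Hypothetical allowlist: hosts we have deliberately agreed to integrate with.
ALLOWED_HOSTS = {"api.vendor.example"}


def is_permitted(url: str) -> bool:
    """Return True only for HTTPS URLs on port 443 whose host is allowlisted."""
    parts = urlsplit(url)
    if parts.scheme != "https":
        return False
    # parts.hostname is the real target host, even if the URL embeds userinfo.
    if parts.hostname not in ALLOWED_HOSTS:
        return False
    return parts.port in (None, 443)
```

Anything not on the list is refused by default, so adding a new vendor becomes a reviewed change on our side rather than something a third party can do for us.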

The HTTP/S retrieval advantage

HTTP/S retrieval systems offer a fundamentally different approach to systems security in a networked world: they prioritize security in balance with efficiency. Unlike software agents that reside persistently within a network, HTTP/S systems fetch data from external servers using secure protocols, significantly reducing the attack surface.
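To make the retrieval model concrete, here is a minimal sketch using only the Python standard library. It assumes a hypothetical JSON endpoint protected by a bearer token; the function names `make_tls_context`, `build_request` and `retrieve` are illustrative and do not describe any particular vendor's API. Nothing is installed inside the other party's network: the retrieving side makes a short-lived, certificate-verified request and then disconnects.

```python
import ssl
import urllib.request


def make_tls_context() -> ssl.SSLContext:
    """Build a TLS context that verifies the server certificate and hostname."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and hostname checks by default
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def build_request(url: str, token: str) -> urllib.request.Request:
    """Prepare a read-only GET request; refuse anything that is not HTTPS."""
    if not url.startswith("https://"):
        raise ValueError("only HTTPS endpoints are retrieved")
    headers = {"Authorization": f"Bearer {token}", "Accept": "application/json"}
    return urllib.request.Request(url, headers=headers, method="GET")


def retrieve(url: str, token: str, timeout: float = 10.0) -> bytes:
    """Fetch the response body over verified TLS and return the raw bytes."""
    request = build_request(url, token)
    with urllib.request.urlopen(request, timeout=timeout, context=make_tls_context()) as resp:
        return resp.read()
```

The design choice worth noting is that the request is read-only and scoped by a token, so the data owner decides what the endpoint returns and can withdraw access without touching any endpoint software.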

Core principles of HTTP/S retrieval systems

By implementing effective network segmentation, we uphold several core principles of secure computing. These principles include:

- Zero Trust: Network segmentation eliminates the “trust” extended to software agents by requiring all operations to remain within the boundaries of our network interface and system logic.

- “Least Privileges” Data Processing: Data access by either party is limited by the integration design and can only occur within separate environments, increasing resilience.

- Secure Communication: Leveraging HTTPS ensures data is encrypted during transmission, protecting against interception and tampering.

- Reduced Attack Surface: By eliminating persistent agents, the number of potential entry points for malicious actors is significantly decreased.

- Monitoring with Boundaries: Network segmentation utilizing HTTP/S provides transparency to both parties to monitor their own internal systems and the boundary between the systems.
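The least-privilege and boundary-monitoring principles above can be sketched together. In this hypothetical example, the integration design is reduced to a fixed tuple of field names (`EXPOSED_FIELDS`), and `export_record` is an invented helper that shares only those fields and logs every crossing of the boundary. The field names are placeholders, not a recommended schema.

```python
import logging

log = logging.getLogger("integration.boundary")

# Hypothetical contract: the only fields the integration design exposes.
EXPOSED_FIELDS = ("user_id", "mfa_enabled", "last_review")


def export_record(record: dict) -> dict:
    """Return only the agreed fields and log what crossed the boundary."""
    shared = {key: record[key] for key in EXPOSED_FIELDS if key in record}
    withheld = sorted(set(record) - set(shared))
    # Log field names only, never values, so the audit trail leaks nothing.
    log.info("boundary export: shared=%s withheld=%s", sorted(shared), withheld)
    return shared
```

For instance, a record containing `user_id`, `mfa_enabled` and `password_hash` would be exported with only the first two fields, and the log would note that `password_hash` was withheld.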

Security benefits of HTTP/S retrieval systems

- Enhanced Data Protection: Encryption is an effective safeguard because we control the rules within our system and at the boundary of our integrations, which helps prevent unauthorized access and data breaches.

- Reduced Vulnerability Exposure: A software agent cracks open our carefully planned secure surface area. An HTTP/S integration with appropriate network segmentation eliminates the invisible vulnerabilities caused by a software agent.

- Improved Compliance: The National Institute of Standards and Technology (NIST) recommends network segmentation as a best practice to reduce cybersecurity risk. Network segmentation is a key component of the NIST CSF and is based on the NIST SP 800-171 control guidelines for "System and Communications Protection" and "Security Assessment".

- Faster Incident Response: Isolated systems can be more easily contained and remediated in case of a security breach.
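One way to picture the containment benefit: when every integration enters through a single authenticated boundary, cutting off a compromised vendor is one revocation rather than an uninstall across every endpoint. The `IntegrationGate` class below is a hypothetical in-memory sketch written for this paper; a production system would rely on its identity provider or API gateway instead.

```python
import hmac


class IntegrationGate:
    """Hypothetical registry of per-integration credentials at the boundary."""

    def __init__(self) -> None:
        self._active = {}  # integration name -> expected token

    def register(self, name: str, token: str) -> None:
        self._active[name] = token

    def revoke(self, name: str) -> None:
        # Containment step: after this, the integration can no longer authenticate.
        self._active.pop(name, None)

    def authorize(self, name: str, presented: str) -> bool:
        expected = self._active.get(name)
        if expected is None:
            return False
        # Constant-time comparison avoids leaking token contents through timing.
        return hmac.compare_digest(expected.encode(), presented.encode())
```

Revoking one entry leaves every other integration untouched, which is what keeps remediation scoped to the affected system.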

Network segmentation and HTTP/S retrieval systems offer a fundamentally different approach to our technical architecture. By fetching data from external servers using secure protocols, we regain control of our secure computing environments. The absence of persistent agents within the network significantly mitigates the risk of breaches and unauthorized access.

While software agents may offer certain levels of automation, the trade-off in security is simply too great. In a vendor demonstration, a software agent can seem “auto-magical” because you have deployed a set of automations with minimal input from your team. However, in exchange for immediate deployment, we give up control over effective practices and the ability to secure our computing environment. Of course, for the vendor this is a much easier tool to create. They do not have to create explicit designs that you as a user can review. They can access data at their choosing without permission from you. They can infiltrate without oversight.

By creating true network segmentation with common patterns such as HTTP/S, we can control our computing environment. By adopting HTTP/S retrieval systems, organizations can have complete control over the data, access and functionality provided to third-party vendors. At Strike Graph, we advocate for a paradigm shift towards network segmentation utilizing HTTP/S retrieval systems. By pivoting the focus to external data retrieval and leveraging the inherent security of HTTP/S, organizations can significantly bolster their defenses against cyber threats. Security vendors, GRC systems, and endpoint protection providers should be leaders in secure computing by not deploying “agent” style architectures.

We in the cybersecurity industry need to review our own deployment architectures. Too many of our products are deployed as software agents, compromising our customers' systems. We should adopt the age-old guidance provided to medical professionals: “Do No Harm”. Are you developing a solution that utilizes best security practices in your architecture? Those of us developing platforms and products should set an example of effective security by implementing integration and deployment architectures that keep our ecosystem safe.
